Spanning Fate

April 12, 2020

Written on December 13, 2013.

Last Wednesday was December 4, 2013. It was an exhausting day, but I was truly happy with it; everything got done. Work, school, home. On Thursday there turned out to be some bleeding, light at first. But the later the day grew, the heavier it became. In the early hours of Friday, I lost my baby.

A baby I had not yet announced to anyone, because I was waiting for the soul to be breathed into it, so that we could then pray for it together; that it might become part of His blessings in this world. I had planned to hold a four-month thanksgiving gathering (syukuran 4 bulanan), inviting anyone who could come, to share the happiness and ask for prayers for a safe delivery. But that gathering never took place.

Kampus Merdeka

January 29, 2020

It has been a long time since I last wrote here. Microblogging via social media is certainly easier: easy to post, easy to get feedback on, and easy to share. But there are so many things that need to be written down, because memory (brain) capacity and storage space are increasingly limited. All right. A sweet Thursday is a day for writing.

This time I will share about Kampus Merdeka, an idea from Minister Nadiem Makarim. I will start with his Kampus Merdeka slides, together with some personal notes of my own. A discussion of Permendikbud 2020 will follow later.

My Name Is Ayi

September 23, 2019

My name is Ayi. Ayi Purbasari. From the name, you can tell that I am a native Sundanese woman ☺. Yes, Sundanese. You know, the ethnic group known for its ready smile, or someah; the one so often mentioned in the advice parents give to children who leave home to study at ITB:

“Don't fall for a Sundanese girl”.

😳

Object-Oriented Programming Concepts (1)

January 2, 2019

Learning object-oriented concepts does not mean merely learning a programming language. Ideally, once the object-oriented (OO) concepts are understood, any language can serve as a tool for making those concepts concrete. Sometimes we jump straight into practicing a programming language without understanding the OO concepts it embodies. This post summarizes the OO concepts that a programmer needs to master.

There are five major topics in learning object-oriented programming:

- Classes and Objects

- Interaction between Objects

- Relationships between Objects

- Collections

- Advanced topics:

  - Abstract and Interface

  - Polymorphism

  - Static and Final

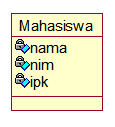

According to Grady Booch, an object is a thing that has an identity, state, and behavior. State is the set of values of the attributes attached to the object. Behavior describes how the object acts, and is represented by methods/functions that can change the state. For example, a student (Mahasiswa) is an object. A student has the attributes name, student ID number, and GPA (IPK). A student has the behavior of studying, through which the value of the GPA can change.
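As a minimal sketch of identity, state, and behavior in Python, the class below gives each student a state (nama, nim, ipk) and one behavior that changes that state. The method name `belajar`, the `kenaikan` parameter, the sample values, and the 4.0 ceiling are assumptions made for illustration; none of them come from the original diagram.

```python
class Mahasiswa:
    """A student object with state (attributes) and behavior (methods)."""

    def __init__(self, nama, nim, ipk=0.0):
        # State: the values held by this particular object's attributes.
        self.nama = nama
        self.nim = nim
        self.ipk = ipk

    def belajar(self, kenaikan=0.25):
        """Behavior: studying changes the state by raising the GPA (IPK).

        The increment and the 4.0 ceiling are illustrative assumptions.
        """
        self.ipk = min(4.0, self.ipk + kenaikan)
        return self.ipk


# Hypothetical sample data: two objects with identical attribute values.
budi = Mahasiswa("Budi", "10001", ipk=3.0)
kembaran = Mahasiswa("Budi", "10001", ipk=3.0)

budi.belajar()  # only this object's state changes

# Identity: equal attribute values do not make two objects the same object.
print(budi is kembaran)         # False
print(budi.ipk, kembaran.ipk)   # 3.25 3.0
```

The second object is untouched by `budi.belajar()`, which is what distinguishes identity from state: each object carries its own values even when they start out equal.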

A class is described as a template or blueprint for an object: it defines the object's structure and is the basis from which objects are created. Objects that come from the same class have the same attributes and methods/functions. An object is said to be an instance of a class.
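The blueprint idea can be sketched in Python as follows. The helper method `ringkasan` and the sample students are hypothetical, added only to show that two objects built from one class share the same structure while holding their own values.

```python
class Mahasiswa:
    """Blueprint: every object built from it gets these attributes and methods."""

    def __init__(self, nama, nim, ipk):
        self.nama = nama
        self.nim = nim
        self.ipk = ipk

    def ringkasan(self):
        # Hypothetical helper that formats this object's own values.
        return f"{self.nim} {self.nama} (IPK {self.ipk:.2f})"


# Instantiation: calling the class creates a new object from the blueprint.
siti = Mahasiswa("Siti", "10002", 3.5)
andi = Mahasiswa("Andi", "10003", 2.9)

# Same structure (attribute names) and same class...
print(sorted(vars(siti)) == sorted(vars(andi)))   # True
print(type(siti) is type(andi) is Mahasiswa)      # True

# ...but each object holds its own values.
print(siti.ringkasan())   # 10002 Siti (IPK 3.50)
print(andi.ringkasan())   # 10003 Andi (IPK 2.90)
```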

In Unified Modeling Language (UML) notation, a class is drawn as a diagram made up of three areas. The first area shows the class name, the second shows the set of attributes, and the third shows the set of methods/functions.

The Mahasiswa class in UML notation

Based on this class diagram, we can write the class in Java as follows:

public class Mahasiswa {
    String nama;  // name
    String nim;   // student ID number
    double ipk;   // GPA; primitive double defaults to 0.0 rather than null
}

In PHP, the code looks like this:

class Mahasiswa {
    private $nama;
    private $nim;
    private $ipk;
}

And in Python:

class Mahasiswa:
    nama = ""
    nim = ""
    ipk = 0.0

Scholarship Info 2019

January 1, 2019

Listed below:

Hokkaido University Summer Program 2019, Japan (Fully Funded)

Deadline: 28 February 2019

Info: https://bit.ly/2QRrjPv

StuNed Program Short Course Scholarship for Indonesian Citizen 2019, Netherlands

Deadline: 27 January 2019

Info: https://bit.ly/2rxz9Q2

Korea University International Summer Campus 2019, South Korea (Partially Funded)

Deadline: 15 May 2019

Info: https://bit.ly/2Qt4Clu

ACU Summer School 2019 at University of Mauritius [Fully Funded]

Deadline: 13 January 2019

Info: https://bit.ly/2KS8UN4

CERN Senior Fellowship Programme 2019 [Fully Funded] in Switzerland

Deadline: 04 March 2019

Info: https://bit.ly/2RxU0y3

ETH Internship 2019 [Fully Funded] Summer Internship in Switzerland

Deadline: 31 December 2018

Info: https://bit.ly/2Pif2Po

UTSIP Japan Summer Internship 2019 Kashiwa [Fully Funded]

Deadline: 31 January 2019

Info: https://bit.ly/2FW8TsL

University of Tokyo Summer Internship Program Japan 2019 /UTokyo Amgen Scholars Program 2019 [Fully Funded]

Deadline: 01 February 2019

Info: https://bit.ly/2QxMYvR

Asian Graduate Student Fellowships 2019, Singapore

Deadline: 15 December 2019

Info: https://bit.ly/2UhFufx

CERN Junior Fellowship Programme 2019 [Fully Funded] in Switzerland

Deadline: 04 March 2019

Info: https://bit.ly/2EdduoB

UNIL Summer Internship in Switzerland 2019 [Fully Funded]

Deadline: 20 January 2019

Info: https://bit.ly/2AU1OmD

CERN Short Term Internships 2019 [Fully Funded] in Switzerland

Deadline: Different Deadlines for Each Program

Info: https://bit.ly/2QCrQVw

CERN Openlab Summer Program 2019 [Fully Funded] in Switzerland

Deadline: 31 January 2019

Info: https://bit.ly/2APE1nS

UTRIP Summer Internship in Japan 2019 at University of Tokyo [Fully Funded]

Deadline: 10 January 2019

Info: https://bit.ly/2AJYGtu

Japan Internship Program 2019 at Okinawa Institute of Science and Technology [Fully Funded]

Deadline: Next Deadline is 28 February 2018

Info: https://bit.ly/2UaRnnC

ASEAN-INDIA RESEARCH TRAINING FELLOWSHIP (AI-RTF), India

Deadline: 31 December 2018

Info: https://bit.ly/2DVi4ai

Future Global Leader Fellowship (Fully Funded), USA

Deadline: 31 January 2019

Info: https://bit.ly/2QwtvMa

Fully Funded Masters and Training Programmes in Belgium (150 Scholarships)

Deadline: 11 January 2019 and 08 February 2019

Info: https://bit.ly/2AIyGid

Young Leaders Access Program 2019 Leadership Program, USA (Middle East)

Deadline: 02 January 2019

Info: https://bit.ly/2PewNPk

2019 MEPI Student Leaders Program, USA [Fully Funded]

Deadline: 31 December 2018

Info: https://bit.ly/2KLzo2V

Young Leaders Access Program 2019, USA (All Country)

Deadline: 02 January 2019

Info: https://bit.ly/2RvdwuX

The New dance WEB Scholarship Program for EU and Non-EU Students in IMPULSTANZ

Deadline: 9 January 2019

Info: https://bit.ly/2DVxr33

Fully Funded Journalist Fellowship at the University of Oxford

Deadline: 11 February 2019

Info: https://bit.ly/2Aen6v7